Krake wins the Saxon Digital Prize 2024

Our open-source project Krake has been awarded the Saxon Digital Prize 2024 in the Open-Source category. After being nominated by a jury of experts, Krake went on to beat the two other nominees in the public vote. We would like to thank everyone who supported us and voted for Krake.

What is Krake?

The Krake orchestrator engine automatically manages containerised workloads on distributed and heterogeneous cloud platforms. Find out more about this open-source software, its features and its use cases below.

Why Krake?

The challenges

Computing jobs are real energy guzzlers. AI applications in particular consume significantly more computing power and energy than conventional computing jobs because of their size and complexity. This creates a conflict that must not be neglected: AI applications have the potential to help overcome our ecological and economic challenges, yet they leave a considerable CO2 footprint.

Solution & advantages

This is where Krake comes into play. Krake [ˈkʀaːkə] is an orchestrator engine for containerised and virtualised workloads on distributed and heterogeneous cloud platforms.

With Krake, cloud users can distribute their virtual workloads primarily on the basis of energy-efficiency criteria, in addition to typical metrics such as hardware, latency and cost.

Krake does this by creating a thin aggregation layer on top of the various platforms (such as OpenStack or Kubernetes) and making them available to the cloud user via a single interface.
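To illustrate the idea of a thin aggregation layer, here is a minimal Python sketch assuming a simple adapter pattern. All class and method names (`Backend`, `AggregationLayer`, `register`, `deploy`) are hypothetical and are not Krake's actual API; the adapters only return identifiers instead of calling the real OpenStack or Kubernetes APIs.

```python
from abc import ABC, abstractmethod


class Backend(ABC):
    """Hypothetical adapter interface: one subclass per platform type."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def deploy(self, workload: str) -> str:
        """Deploy a workload and return a platform-specific identifier."""


class KubernetesBackend(Backend):
    def deploy(self, workload: str) -> str:
        # Placeholder: a real adapter would call the Kubernetes API here.
        return f"k8s://{self.name}/{workload}"


class OpenStackBackend(Backend):
    def deploy(self, workload: str) -> str:
        # Placeholder: a real adapter would call the OpenStack APIs here.
        return f"openstack://{self.name}/{workload}"


class AggregationLayer:
    """Single interface for the cloud user; routes requests to registered backends."""

    def __init__(self) -> None:
        self._backends: dict[str, Backend] = {}

    def register(self, backend: Backend) -> None:
        self._backends[backend.name] = backend

    def platforms(self) -> list[str]:
        """List the names of all platforms the user can target."""
        return sorted(self._backends)

    def deploy(self, platform: str, workload: str) -> str:
        # The user only names the target; platform details stay behind the adapter.
        return self._backends[platform].deploy(workload)
```

Because callers only talk to `AggregationLayer`, supporting another platform in this sketch means writing one more adapter without touching client code.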

Field of application

Krake can be used for a variety of application scenarios in which the processing of virtual computing jobs or the utilisation of the digital infrastructure is to be optimised.

Features

Modularity

The architectural components of Krake run as microservices in the background. They are loosely coupled and can be started, customised or replaced with your own implementations independently of each other.

Intelligent workload distribution

Based on individual metrics and labels, Krake can automatically and intelligently decide where a virtualised workload should be executed.
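A minimal sketch of how label-based constraints could narrow down the possible execution sites. The constraint format `(key, operator, values)` and the functions `satisfies` and `matching_sites` are hypothetical illustrations, not Krake's actual constraint syntax.

```python
from typing import Iterable

# Hypothetical constraint format: (label key, operator, allowed values).
Constraint = tuple[str, str, tuple[str, ...]]


def satisfies(labels: dict[str, str], constraint: Constraint) -> bool:
    """Check one constraint against a site's labels."""
    key, op, values = constraint
    value = labels.get(key)  # None if the site does not carry this label
    if op == "in":
        return value in values
    if op == "not in":
        # A site without the label is treated as satisfying "not in".
        return value not in values
    raise ValueError(f"unknown operator: {op!r}")


def matching_sites(sites: dict[str, dict[str, str]],
                   constraints: Iterable[Constraint]) -> list[str]:
    """Return, in input order, the names of all sites that satisfy every constraint."""
    constraints = list(constraints)
    return [name for name, labels in sites.items()
            if all(satisfies(labels, c) for c in constraints)]
```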

Scalability

Kubernetes clusters can be rolled out, customised and scaled with the help of Krake and infrastructure-providing software (e.g. the Infrastructure Manager). This can be done both manually and automatically.
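As an illustration of what an automatic scaling rule could look like, the sketch below applies the proportional formula that the Kubernetes Horizontal Pod Autoscaler documents for replicas, `ceil(current * observed / target)`, to the node count of a cluster. The function name, the CPU-utilisation input and the default bounds are assumptions; this is not necessarily how Krake or the Infrastructure Manager decide when to scale.

```python
import math


def desired_nodes(current_nodes: int, cpu_utilisation: float, target: float = 0.6,
                  min_nodes: int = 1, max_nodes: int = 10) -> int:
    """Hypothetical proportional scaling rule for the node count of one cluster.

    cpu_utilisation and target are fractions of capacity; the result is
    clamped to [min_nodes, max_nodes].
    """
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    # Same shape as the Kubernetes HPA rule: ceil(current * observed / target).
    wanted = math.ceil(current_nodes * cpu_utilisation / target)
    return max(min_nodes, min(max_nodes, wanted))
```

The clamping keeps an automatic rule within limits an operator sets manually, so both modes of operation can coexist.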

Open Source

Krake is fully open source and therefore benefits from the advantages that come with it, such as a strong community, high quality and security through peer review, better interoperability and long-term accessibility of the code.

Use Cases

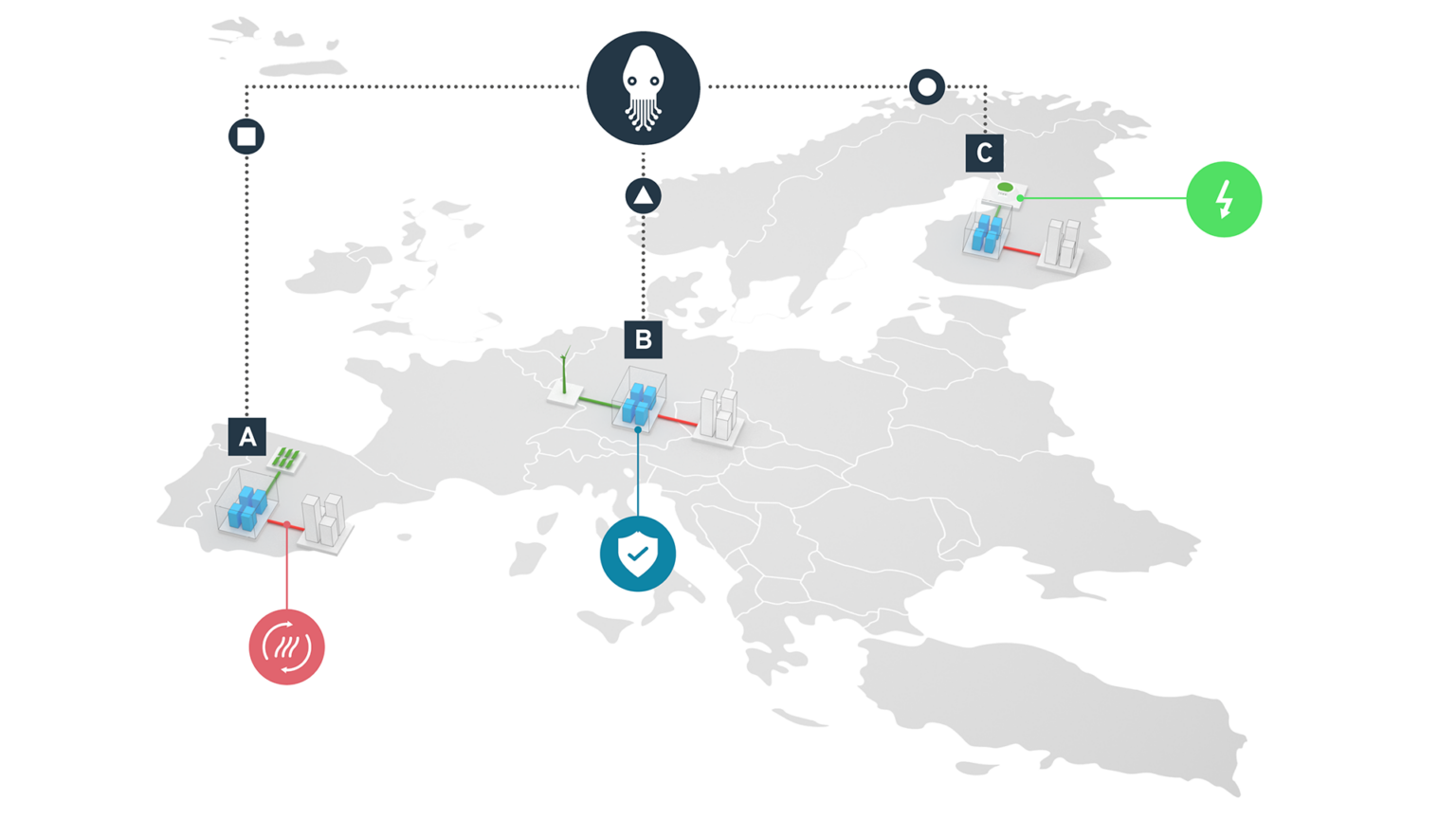

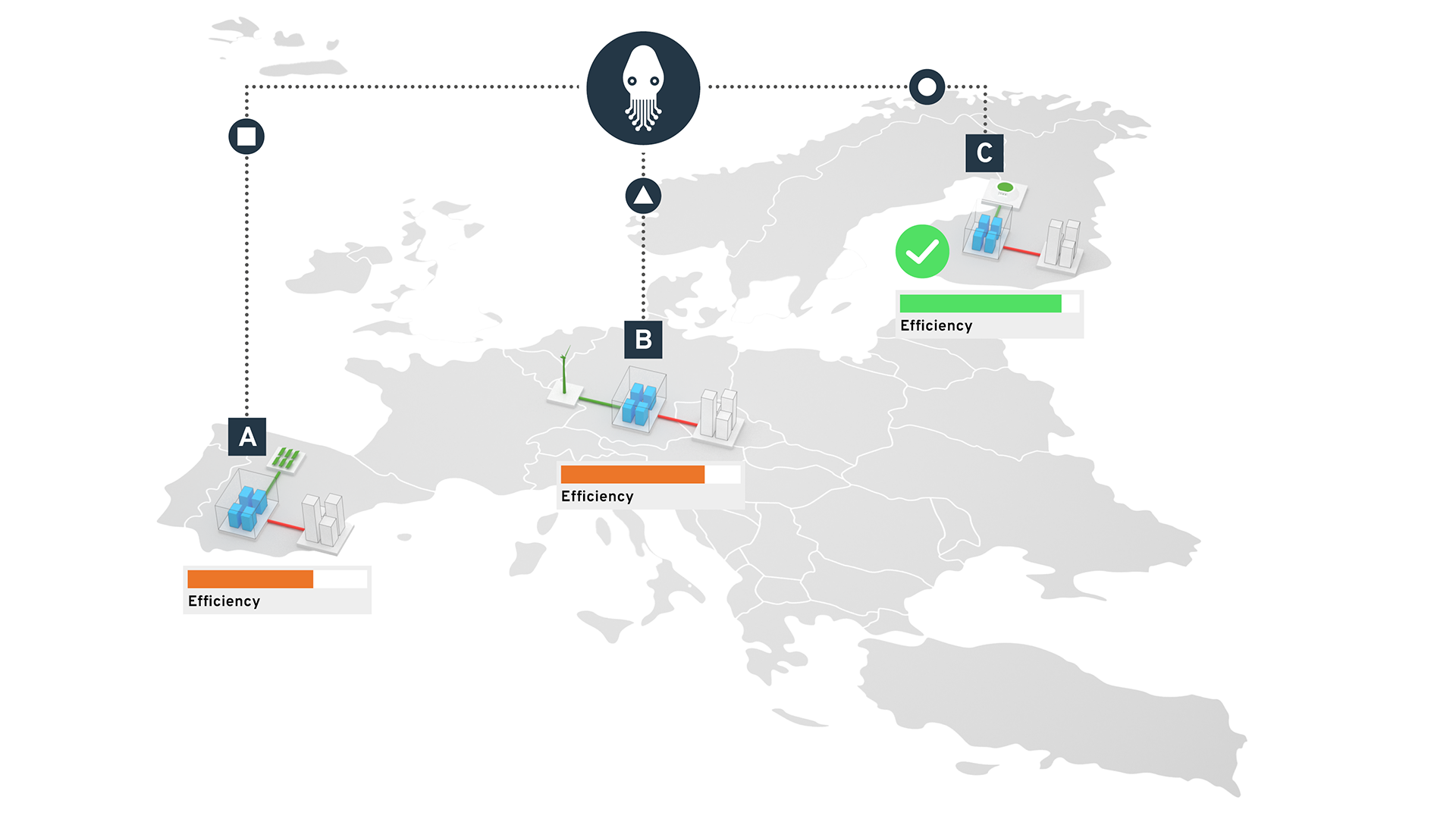

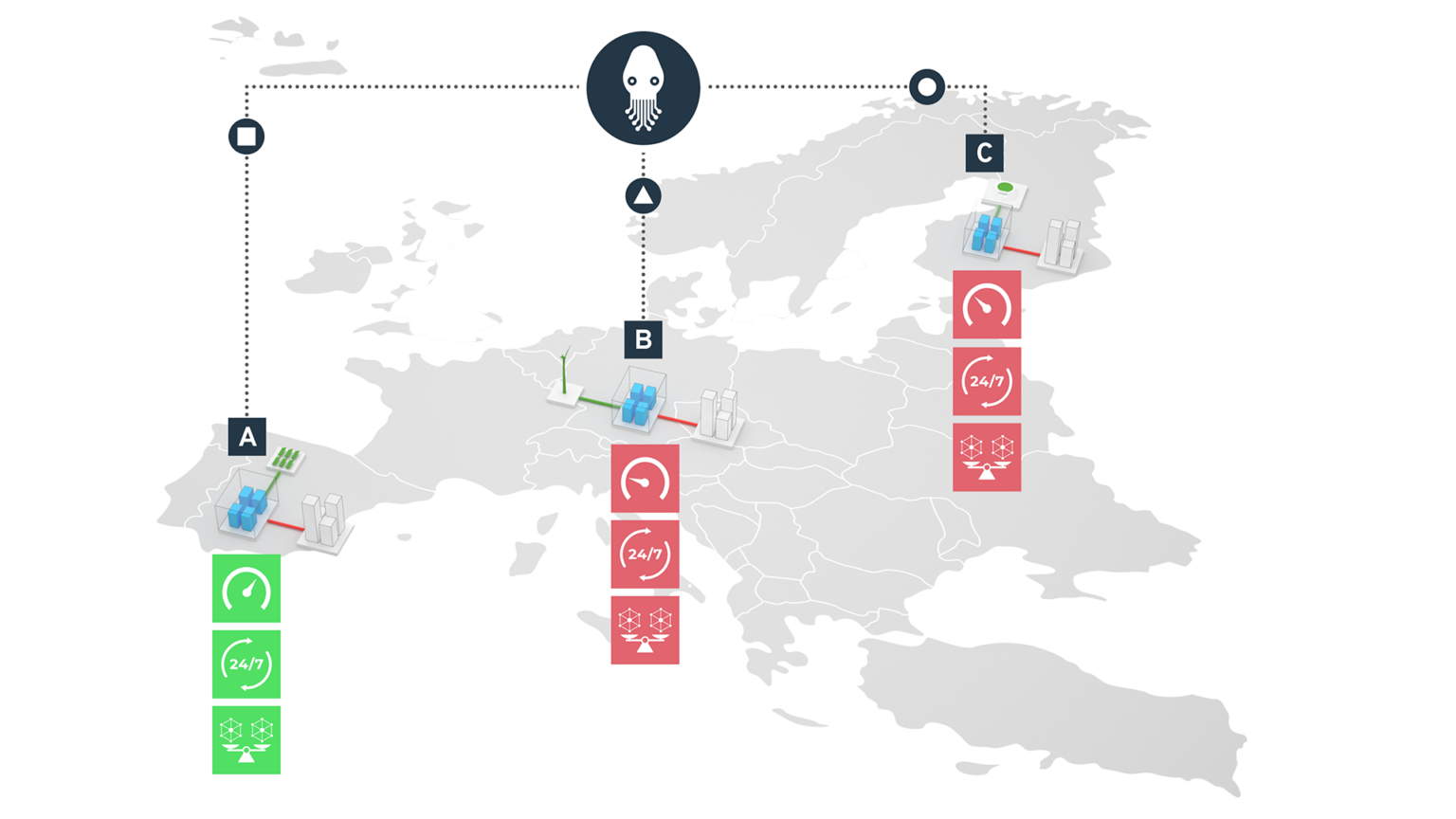

In a distributed digital infrastructure, Krake can be used to process virtualised workloads at precisely the locations that are optimal from an ecological, technical or economic perspective at any given time. Users can individually define the metrics for the calculation of optimal execution locations. This results in the following example scenarios:

1. Requirement-optimised workload distribution

Krake can ensure that a virtualised workload is always processed at the optimum location. First, Krake preselects the locations that fulfil all of the application's requirements (e.g. technical restrictions). It then selects the best of these locations for processing the workload, for example from an energy-optimisation perspective.

For example, at location A a compute job could help heat a building thanks to its high computing load, while at location B workloads are processed to meet very specific data-security requirements. Workloads processed at location C, on the other hand, benefit most from particularly green energy, which minimises their CO2 footprint. Depending on how Krake is configured to optimise placement through metrics and labels, each workload is placed where the conditions for processing that particular compute job are most favourable.
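The two-phase selection (preselect on hard requirements, then rank the remaining locations) can be sketched as follows. This is a minimal sketch under stated assumptions: requirements are exact label matches, metrics are hypothetical scores normalised to [0, 1] where higher is better, and ranking is a weighted average. Krake's actual scheduler may normalise and weigh metrics differently.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    labels: dict[str, str]     # hard properties, e.g. {"security": "high"}
    metrics: dict[str, float]  # hypothetical scores in [0, 1], higher is better


def place(sites: list[Site], required: dict[str, str], weights: dict[str, float]) -> str:
    """Filter on hard requirements, then rank the remaining sites by weighted score."""
    # Phase 1: preselect the sites that fulfil every requirement of the application.
    candidates = [s for s in sites
                  if all(s.labels.get(k) == v for k, v in required.items())]
    if not candidates:
        raise LookupError("no site fulfils all requirements")

    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    # Phase 2: weighted average of the metrics the user cares about.
    def score(site: Site) -> float:
        return sum(w * site.metrics.get(m, 0.0) for m, w in weights.items()) / total

    return max(candidates, key=score).name


if __name__ == "__main__":
    sites = [
        Site("A", {"security": "standard"}, {"green_energy": 0.4, "heat_reuse": 0.9}),
        Site("B", {"security": "high"}, {"green_energy": 0.5, "heat_reuse": 0.1}),
        Site("C", {"security": "standard"}, {"green_energy": 0.95, "heat_reuse": 0.2}),
    ]
    print(place(sites, {}, {"green_energy": 1.0}))                    # C
    print(place(sites, {}, {"heat_reuse": 1.0}))                      # A
    print(place(sites, {"security": "high"}, {"green_energy": 1.0}))  # B
```

Changing only the weights shifts the choice between A and C, while the hard requirement alone forces B, mirroring the three locations in the example.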

2. Energy-optimised placement of workloads

If the locations of the distributed digital infrastructure are supplied by different energy sources, such as a wind turbine, a hydroelectric power plant or a solar farm, Krake could be used to place workloads at whichever location is currently generating the most renewable energy. Depending on the weather conditions and the current energy demand of the power grid, Krake could distribute the workloads so that the available renewable energy is used as efficiently as possible.
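A minimal sketch of energy-aware placement, assuming each location reports its current renewable generation and local demand in kW (hypothetical inputs, for instance from a monitoring system). The function picks the location with the largest renewable surplus that can fully absorb the workload's estimated power draw.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EnergySite:
    name: str
    renewable_kw: float  # hypothetical: current renewable generation at the site
    demand_kw: float     # hypothetical: current local consumption at the site

    @property
    def surplus_kw(self) -> float:
        return self.renewable_kw - self.demand_kw


def greenest_site(sites: list[EnergySite], workload_kw: float) -> Optional[str]:
    """Pick the site with the largest renewable surplus that can absorb the workload."""
    # Only sites whose surplus covers the workload's estimated power draw qualify.
    covered = [s for s in sites if s.surplus_kw >= workload_kw]
    if not covered:
        return None  # no site can run it on surplus renewables; caller decides the fallback
    return max(covered, key=lambda s: s.surplus_kw).name


if __name__ == "__main__":
    windy_day = [EnergySite("wind", 120.0, 80.0), EnergySite("hydro", 60.0, 50.0),
                 EnergySite("solar", 30.0, 35.0)]
    calm_night = [EnergySite("wind", 20.0, 80.0), EnergySite("hydro", 60.0, 50.0),
                  EnergySite("solar", 0.0, 35.0)]
    print(greenest_site(windy_day, 5.0))   # wind
    print(greenest_site(calm_night, 5.0))  # hydro
```

Re-evaluating the same rule as weather and grid conditions change is what moves new workloads from one location to another in this sketch.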

3. Placement of workloads based on further individual metrics

Although Krake is primarily designed to place workloads as sustainably as possible, its applications are not limited to that. Scenarios are conceivable in which Krake places workloads on a time-controlled basis or moves them based on service parameters (e.g. network latency). For example, Krake can always place workloads with strict availability requirements in data centres that offer high network stability, high availability and low latency.
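For latency-critical workloads, a placement rule might first enforce service-level thresholds and then prefer the lowest latency. The sketch below assumes hypothetical per-data-centre measurements (`latency_ms`, `availability`); the names and thresholds are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DataCentre:
    name: str
    latency_ms: float    # hypothetical: measured round-trip latency to the users
    availability: float  # hypothetical: observed availability, e.g. 0.999


def place_critical(centres: list[DataCentre], max_latency_ms: float,
                   min_availability: float) -> Optional[str]:
    """Return the lowest-latency data centre that meets both service-level thresholds."""
    eligible = [c for c in centres
                if c.latency_ms <= max_latency_ms and c.availability >= min_availability]
    if not eligible:
        return None  # no data centre meets the service levels
    return min(eligible, key=lambda c: c.latency_ms).name
```

Treating the thresholds as hard filters and latency as the ranking criterion keeps a fast but unreliable site from winning on latency alone.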

Documentation & Source Code

Cooperative software tools

Krake can be used to control cloud and Kubernetes cluster resources in order to distribute workloads across them. Krake can also create Kubernetes cluster resources with the help of other tools; this function is currently provided by the Infrastructure Manager (IM). A similar approach for creating cloud resources (OpenStack) is planned with Yaook and is currently being implemented.

Videos about Krake

ALASCA Tech Talk


Krake is introduced

AI Sprint


Krake in the "AI Sprint" project

Meetings

Community chat

Meeting notes

Do you want to actively participate in Krake and contribute your ideas and skills to our open-source project?

Find out here how to become part of the developer community around Krake and come aboard:

A project by ALASCA

In November 2023, Krake found its new home at ALASCA, a non-profit organisation for the (further) development of operational, open cloud infrastructures. ALASCA's mission revolves around developing and providing open-source tools that not only enable but also simplify the development and operation of customised cloud infrastructures.

In addition to the practical development work on these projects, ALASCA also sees itself as a provider of knowledge on these topics, not only within the organisation but also to the outside world, for example through the ALASCA Tech Talks.

With a strong, motivated community and the combined expertise of its members, ALASCA is driving forward digital sovereignty in Germany and Europe in the long term - also in collaboration with other open source initiatives and communities in the digital sector.