Welcome to Krake.

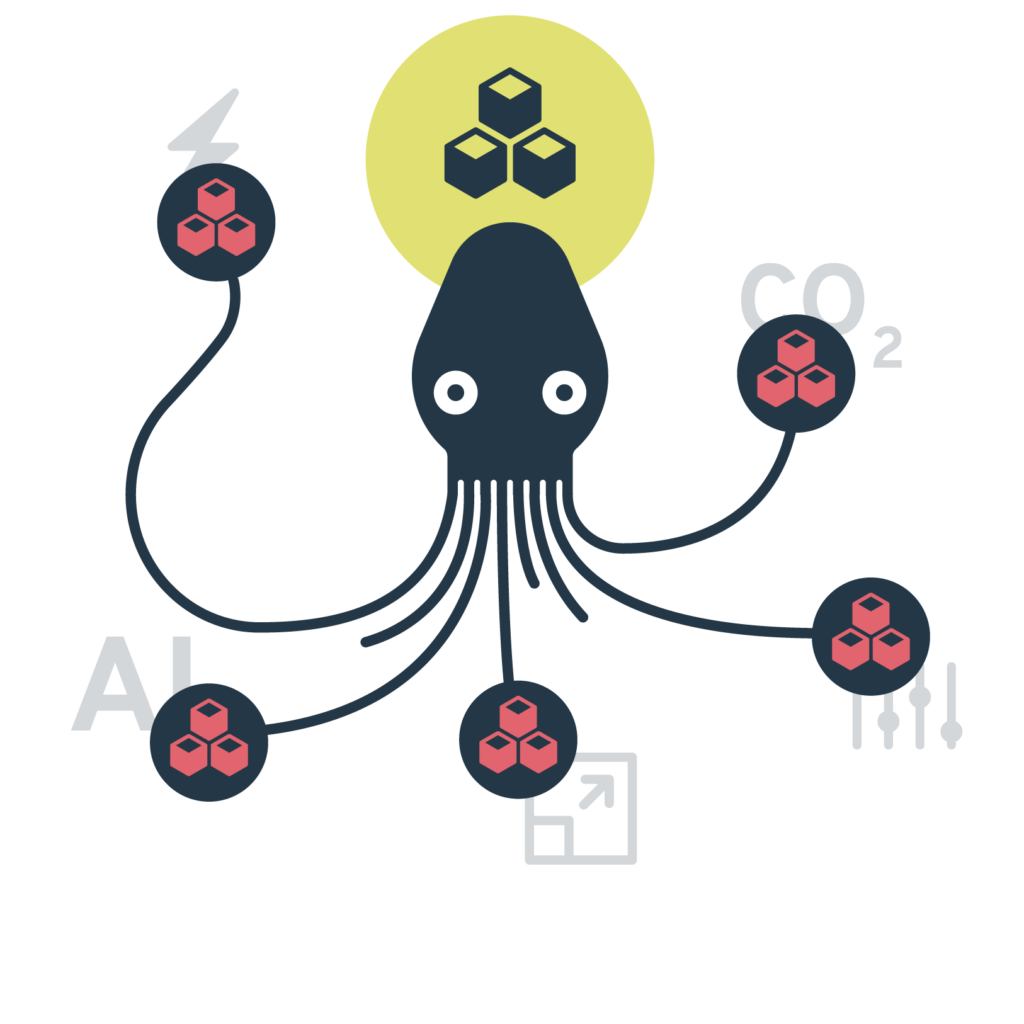

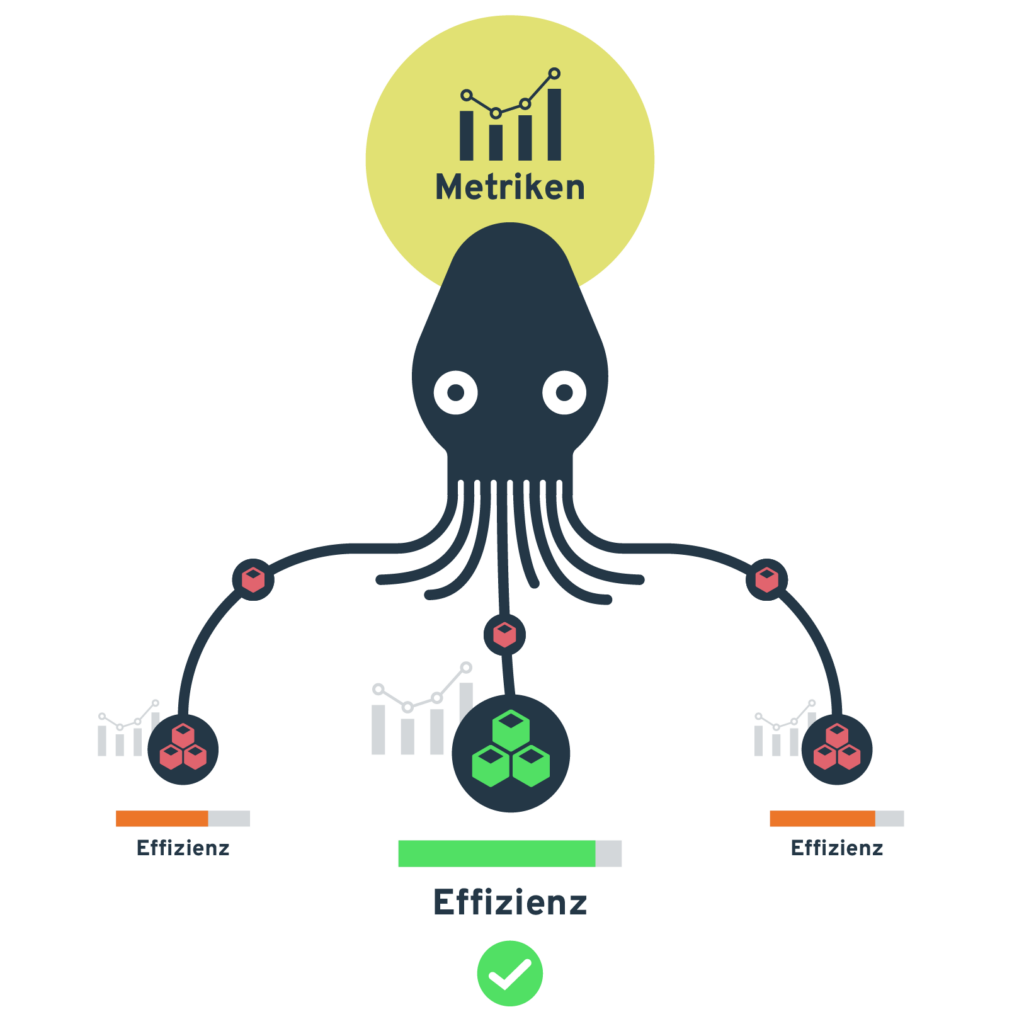

Optimised distribution of workloads based on individual metrics.

On an open source basis.

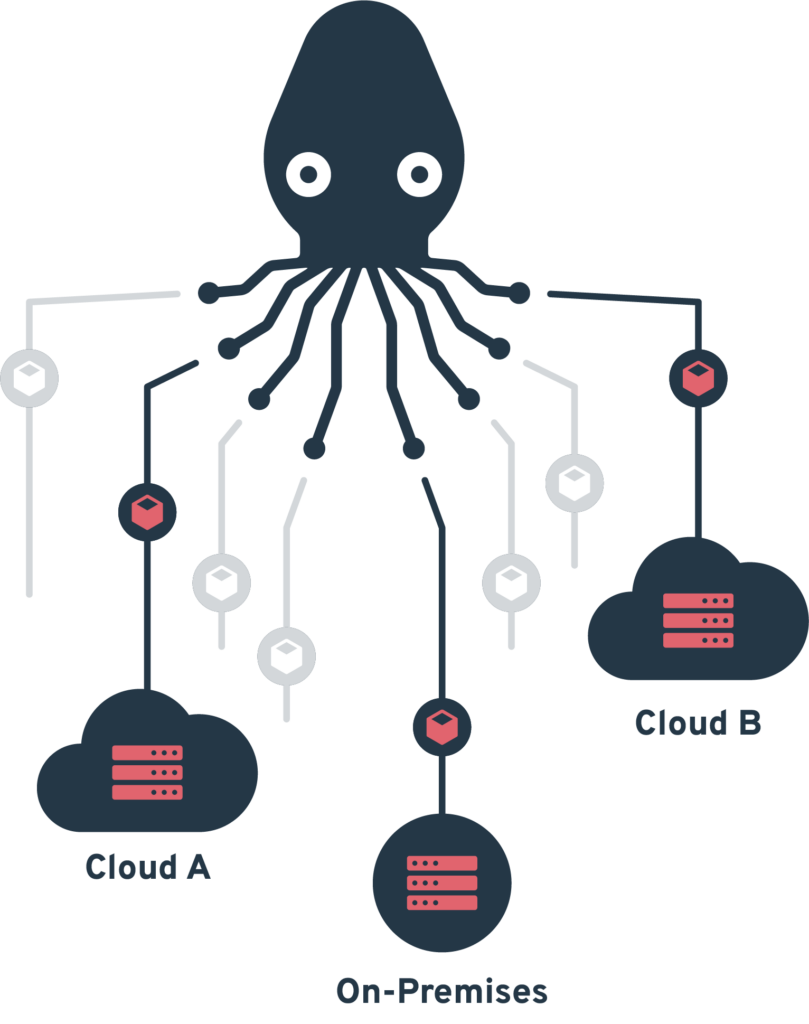

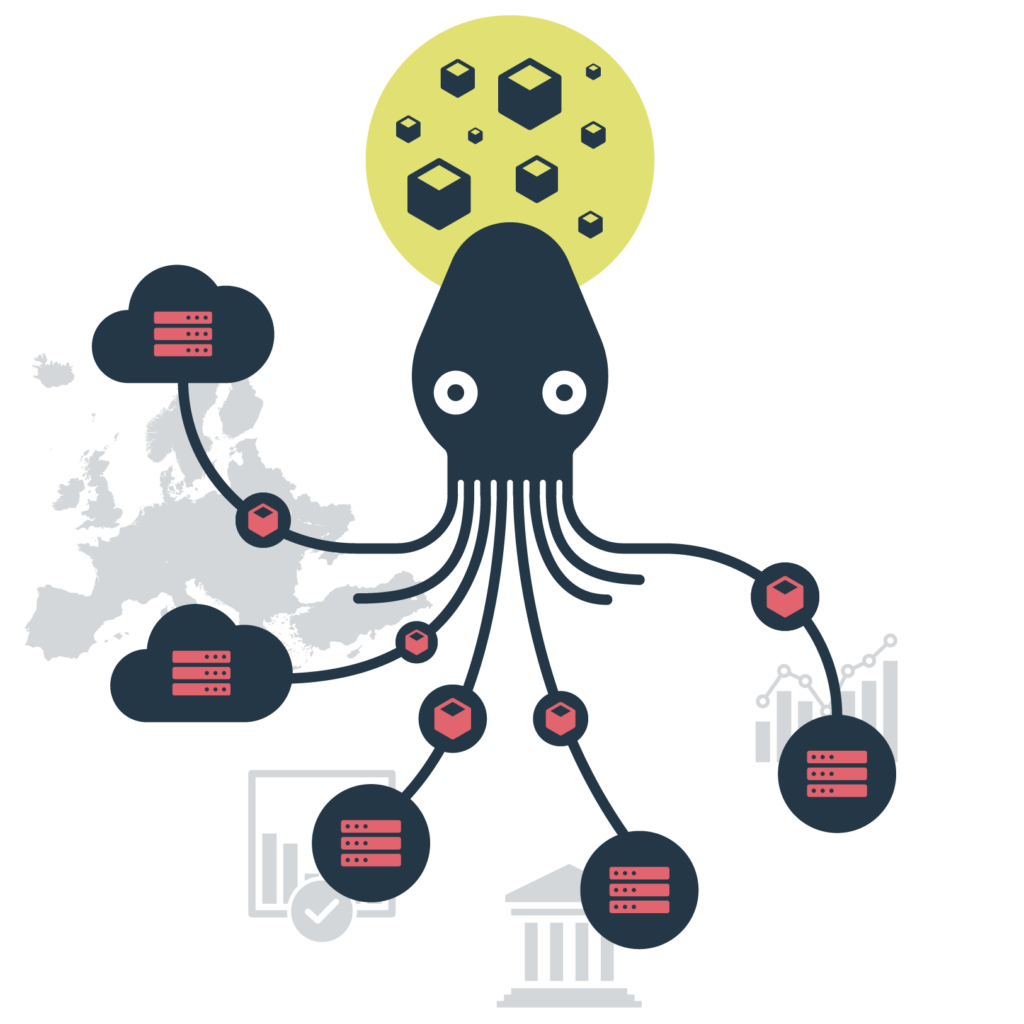

Whether multi-cloud, private cloud or on-premises: in a distributed digital infrastructure, the open source workload manager Krake [ˈkʀaːkə] distributes virtualised and containerised workloads efficiently to the execution locations that are optimal from an ecological, technical or economic point of view. Users can freely select and configure the corresponding metrics.

Managing containerised applications on distributed infrastructures requires intelligent orchestration - based on various metrics and with minimal administrative effort.

Computing-intensive workloads, especially AI applications, cause high energy costs and CO₂ emissions - from development environments to business-critical production systems.

Krake orchestrates container workloads via a standardised interface on distributed Kubernetes clusters - across locations and automatically.

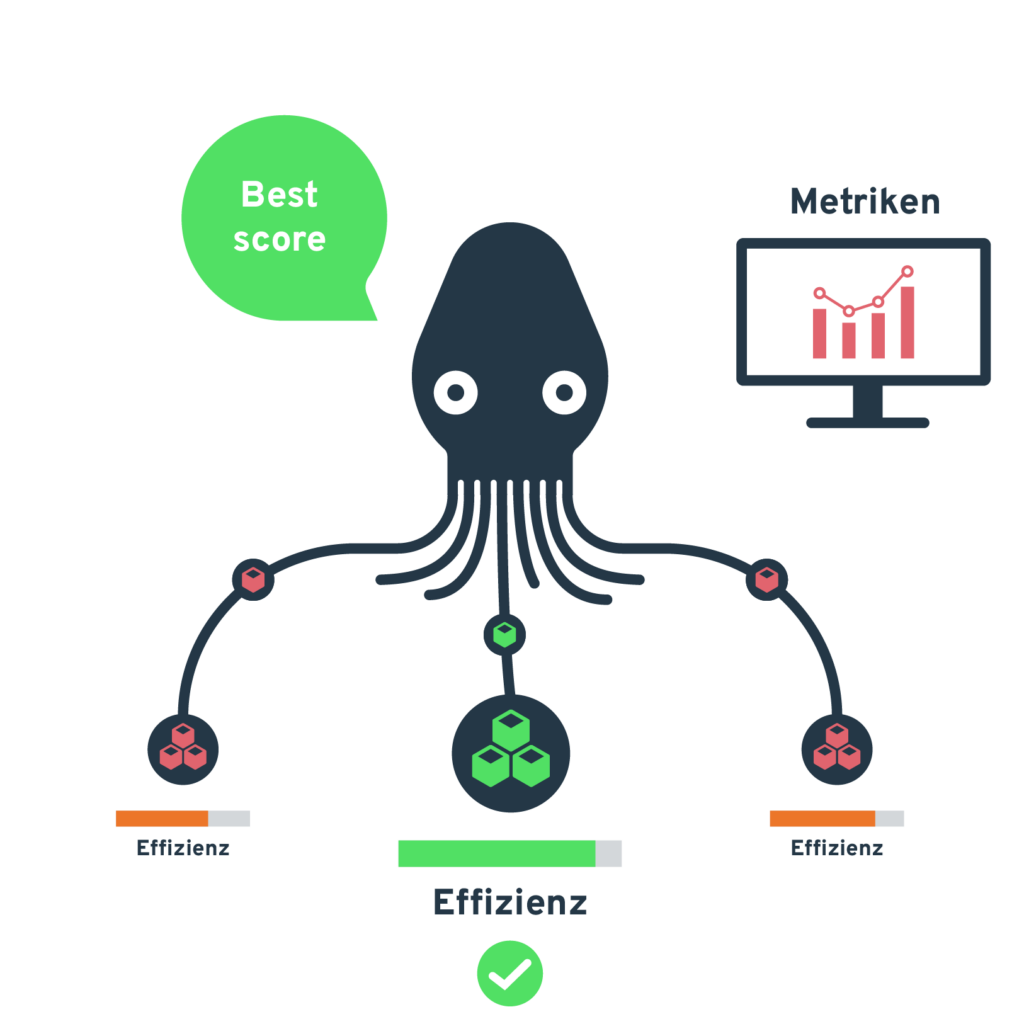

Users define individual metrics for workload distribution: performance, costs, energy efficiency and security requirements are taken into account in real time.

From local development environments to geographically distributed production systems, Krake optimises container workloads of all sizes, from microservices to AI training environments.

Standardised interface for managing distributed Kubernetes clusters via a central abstraction level.

Microservice-based components enable flexible customisation and integration of your own developments into the orchestration pipeline.

Orchestration of Kubernetes workloads across different clusters and locations.

Automated container workload distribution based on configurable metrics such as latency, energy and costs, as well as user-defined parameters.

Fine-grained control of workload distribution through user-defined labels and constraints.

Orchestration of both stateless and stateful container applications - from microservices to complex database systems.

Automated provisioning and scaling of Kubernetes clusters via various infrastructure providers.

Krake is completely open source and enables collaboration and transparency at every step.

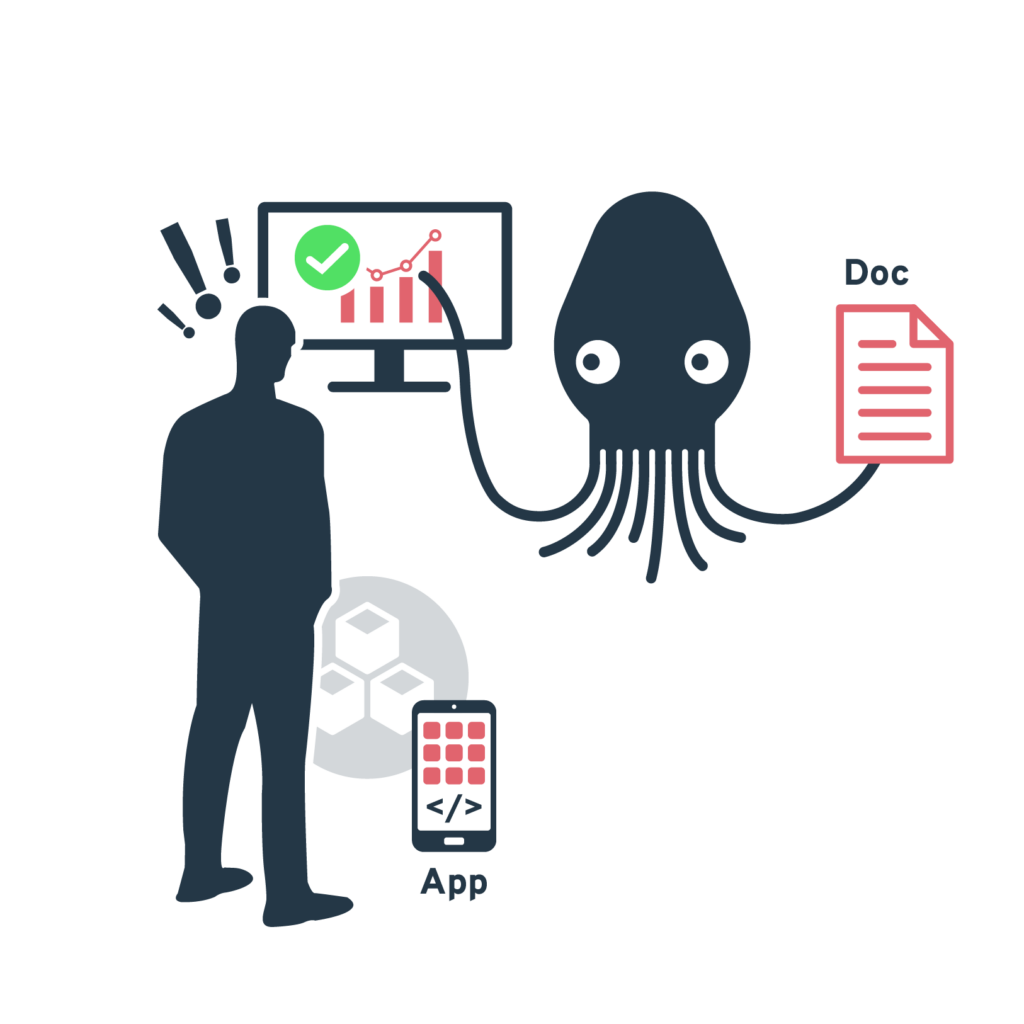

Define your individual metrics and metrics provider (e.g. Prometheus). These determine which criteria Krake uses to optimise your workload placement.

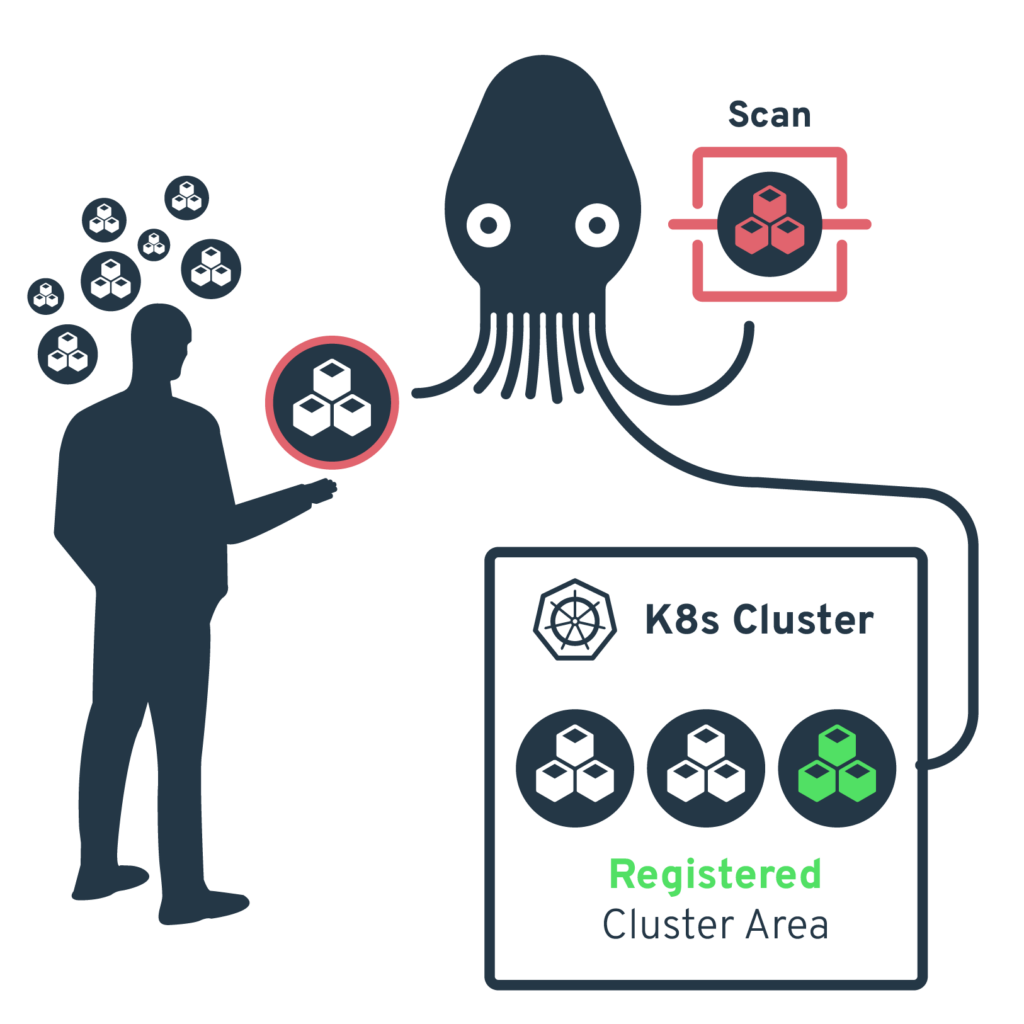

Register your Kubernetes clusters with Krake. The clusters can be operated both locally and in the cloud.

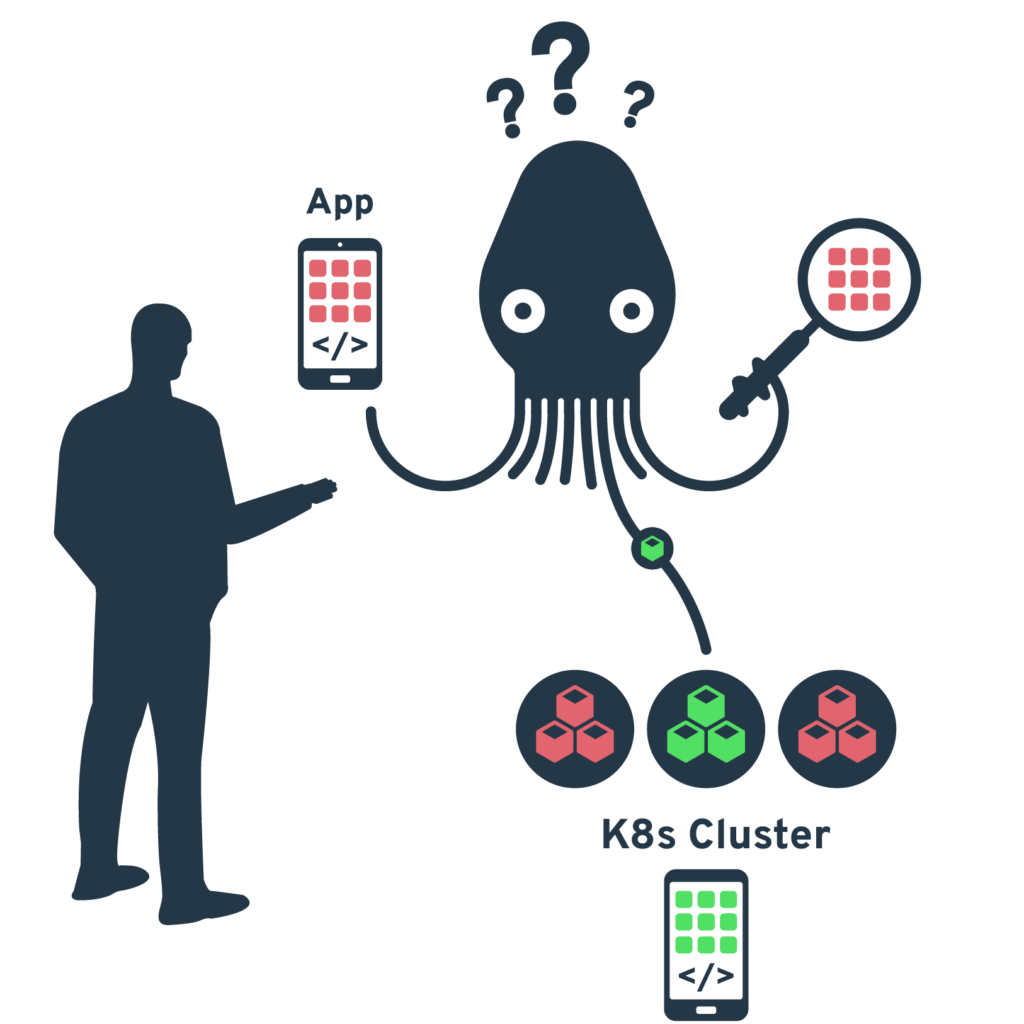

Roll out your containerised applications. Krake analyses the defined metrics and automatically places the workloads on the most suitable clusters.

Krake continuously monitors the metrics and automatically adjusts the placement of workloads as required - for optimum performance and resource utilisation.
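
The placement step described above can be pictured as a weighted ranking of clusters. The following is a minimal sketch under stated assumptions, not Krake's actual scheduler or API: the `Cluster` class, the `score` and `place` functions, and the convention that every metric is normalised to the range 0 to 1 with higher meaning better are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical cluster record; not a Krake data type."""
    name: str
    # Assumption: metric values are normalised to [0, 1], higher is better.
    metrics: dict[str, float] = field(default_factory=dict)


def score(cluster: Cluster, weights: dict[str, float]) -> float:
    """Return the weighted average of the cluster's metric values.

    Metrics the cluster does not report count as 0.0.
    """
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("at least one weight must be non-zero")
    weighted = sum(w * cluster.metrics.get(name, 0.0) for name, w in weights.items())
    return weighted / total_weight


def place(clusters: list[Cluster], weights: dict[str, float]) -> Cluster:
    """Pick the cluster with the highest weighted score."""
    if not clusters:
        raise ValueError("no clusters registered")
    return max(clusters, key=lambda c: score(c, weights))


clusters = [
    Cluster("on-prem", {"energy": 0.9, "latency": 0.4}),
    Cluster("cloud", {"energy": 0.5, "latency": 0.9}),
]

# An energy-focused workload and a latency-focused workload land on different clusters.
print(place(clusters, {"energy": 2, "latency": 1}).name)  # on-prem
print(place(clusters, {"energy": 1, "latency": 3}).name)  # cloud
```

Re-running `place` whenever the metric values change is one simple way to model the continuous adjustment mentioned above; a real system would also have to weigh the cost of moving a running workload.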

Efficient use of resources in the local data centre

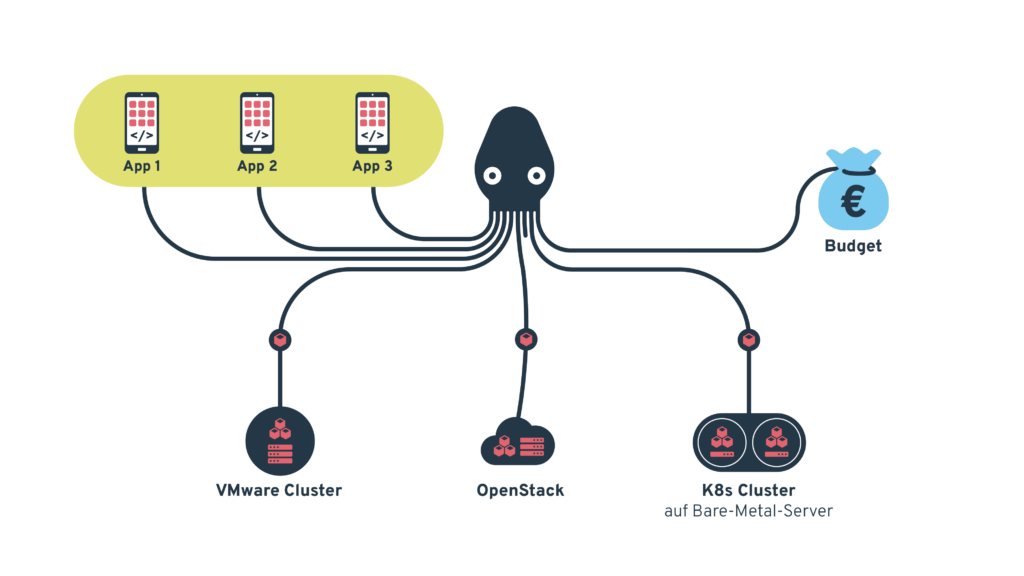

A medium-sized software company uses Krake to integrate its heterogeneous IT infrastructure, consisting of a classic VMware cluster, two Kubernetes clusters on bare-metal servers and an OpenStack installation. So that Krake can manage them, Kubernetes clusters are deployed on the VMware cluster and the OpenStack installation. The main challenge lies in managing these different platforms in a standardised way and making optimal use of resources with a limited IT budget.

Krake creates a standardised management level and enables:

- Automatic distribution of development and test environments

- Load-dependent scaling of customer applications

- Seamless maintenance windows thanks to automatic workload relocation

Measurable improvements:*

- Increase in average server utilisation from 45% to 75%

- Reduction of administration time by 60% through centralised management

- Deployment time for new development environments reduced from 2 days to 30 minutes

- Energy saving of 25% through optimised resource distribution

- Zero downtime during maintenance work thanks to automatic workload migration

*Fictitious values

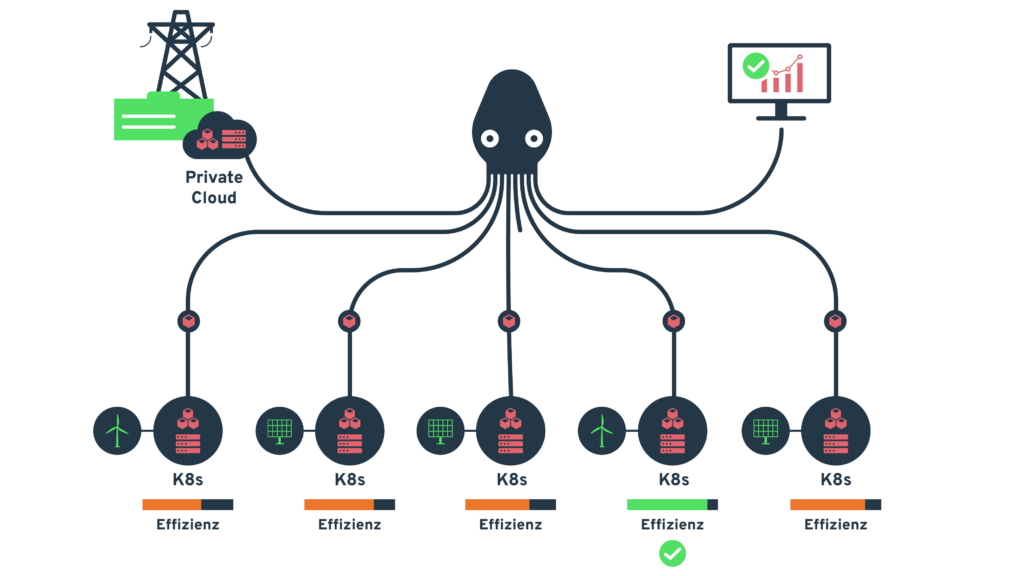

Intelligent workload distribution at a regional energy supplier

A regional energy supplier uses Krake to orchestrate its hybrid IT infrastructure, which consists of OpenStack-based private clouds and Kubernetes clusters at five locations. The particular challenge lies in optimising the use of fluctuating energy generation from its own wind and solar parks while at the same time ensuring security of supply.

Krake orchestrates the container workloads based on a multi-dimensional optimisation model:

- The availability of renewable energy is weighed up in real time against latency requirements and resource needs

- Non-time-critical analyses of smart meter data are automatically shifted to locations with energy surpluses

- Critical applications such as network monitoring are always operated within a defined latency limit of at most 10 ms
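
The trade-off in the list above can be sketched as a hard constraint followed by a preference. This is an illustrative sketch only, not the energy supplier's model or Krake's implementation: the `Site` class, the `choose_site` function and all sample values are hypothetical, and only the 10 ms limit is taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    """Hypothetical description of one location."""
    name: str
    renewable_share: float  # fraction of current supply from renewables, 0..1
    latency_ms: float       # measured latency to the service's consumers

MAX_CRITICAL_LATENCY_MS = 10.0  # latency limit for critical applications


def choose_site(sites: list[Site], critical: bool) -> Site:
    """Prefer the greenest site; critical workloads must also meet the latency limit."""
    if critical:
        candidates = [s for s in sites if s.latency_ms <= MAX_CRITICAL_LATENCY_MS]
    else:
        candidates = list(sites)
    if not candidates:
        raise LookupError("no site satisfies the latency limit")
    return max(candidates, key=lambda s: s.renewable_share)


sites = [
    Site("wind-park", renewable_share=0.95, latency_ms=25.0),
    Site("city", renewable_share=0.60, latency_ms=4.0),
    Site("solar-park", renewable_share=0.80, latency_ms=9.0),
]

print(choose_site(sites, critical=False).name)  # wind-park
print(choose_site(sites, critical=True).name)   # solar-park
```

Treating latency as a filter rather than as one more weighted term means a large energy surplus can never push a critical service past its limit.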

Measurable improvements:*

- Increase in the share of renewable energy in the data centre network from 60% to 85%

- Reduction of IT energy costs by 45%

- Reduction of the average response time of critical applications by 30%

- Automation of 95% of workload distribution, which was previously controlled manually

Thanks to Krake's central management interface, the DevOps team was able to switch from a site-specific to a service-oriented organisational structure, which significantly accelerates the development of new services.

*Fictitious values

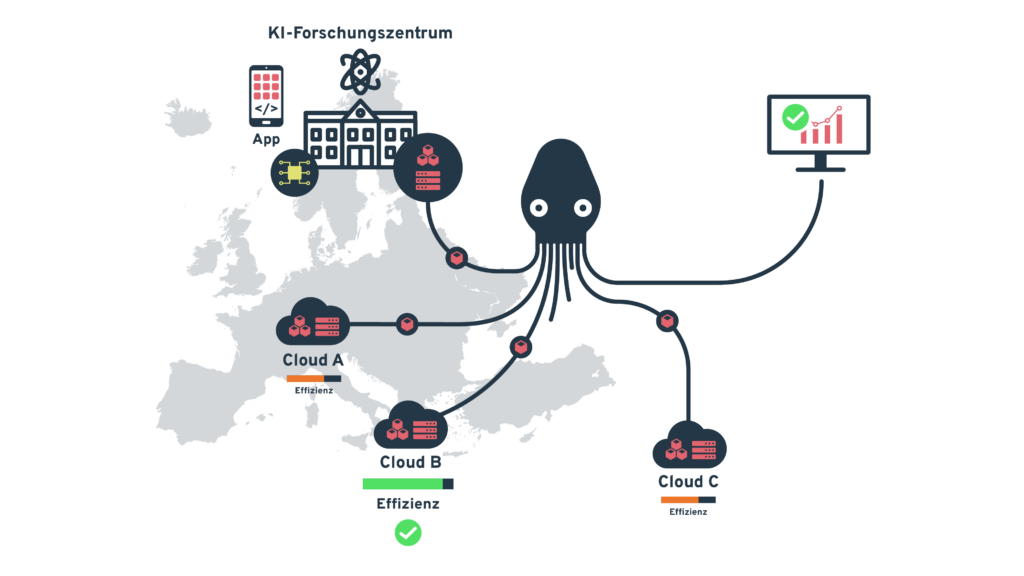

AI training on hybrid cloud infrastructure in Europe

A European AI research consortium uses Krake to orchestrate its machine learning workloads across a hybrid infrastructure that includes its own data centres as well as Kubernetes clusters from several cloud providers. The key challenge is to make the best use of different pricing models and hardware resources (specialised GPUs, TPUs, FPGAs) while complying with data protection requirements.

Krake orchestrates the workloads based on several parameters:

- Costs of the various cloud providers and locations

- Availability of special hardware accelerators

- Data protection requirements (e.g. GDPR-compliant processing)

- Energy efficiency of the locations

- Network latency for distributed training
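
Rule-based distribution over parameters like these can be illustrated with label matching followed by a cost comparison. The sketch below is an assumption-laden illustration and does not use Krake's constraint syntax: the `Cluster` class, the label keys `region` and `accelerator`, the function names and the prices are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Cluster:
    """Hypothetical cluster record with free-form labels."""
    name: str
    hourly_cost_eur: float
    labels: dict[str, str] = field(default_factory=dict)


def matches(cluster: Cluster, required: dict[str, str]) -> bool:
    """True if the cluster carries every required label with the required value."""
    return all(cluster.labels.get(key) == value for key, value in required.items())


def cheapest_matching(clusters: list[Cluster], required: dict[str, str]) -> Optional[Cluster]:
    """Return the cheapest cluster that satisfies all label rules, or None."""
    eligible = [c for c in clusters if matches(c, required)]
    return min(eligible, key=lambda c: c.hourly_cost_eur) if eligible else None


clusters = [
    Cluster("us-gpu", 1.8, {"region": "us", "accelerator": "gpu"}),
    Cluster("eu-gpu-a", 2.4, {"region": "eu", "accelerator": "gpu"}),
    Cluster("eu-gpu-b", 2.1, {"region": "eu", "accelerator": "gpu"}),
    Cluster("eu-cpu", 0.6, {"region": "eu", "accelerator": "none"}),
]

# A training job that must stay in the EU and needs a GPU.
job_rules = {"region": "eu", "accelerator": "gpu"}
print(cheapest_matching(clusters, job_rules).name)  # eu-gpu-b
```

The cheaper `us-gpu` cluster is never considered because it fails the region rule, which is the point of applying rules before optimising for cost.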

Measurable improvements:*

- Reduction of training costs by 55% through dynamic use of favourable cloud resources

- Reduction of training time for complex models by 40% through optimised hardware allocation

- Energy savings of 40% by shifting workloads to energy-efficient locations

- 100% GDPR compliance through rule-based workload distribution

- Automation of 90% of the previously manual scheduling decisions

*Fictitious values

Our open source project Krake was awarded the Saxon Digital Prize 2024 in the category Open Source. After being selected by a jury of experts, Krake also beat two other nominees in the public vote.

We would like to take this opportunity to thank everyone who supported us in the voting and voted for Krake.

(A video production by Sympathiefilm GmbH)

Krake is introduced

Krake in the "AI Sprint" project

In November 2023, Krake found its new home at ALASCA - a non-profit organisation for the (further) development of operational, open cloud infrastructures. ALASCA's mission revolves around the further development and provision of open source tools that not only enable but also facilitate the development and operation of customised cloud infrastructures.

In addition to the practical development work on these projects, ALASCA also sees itself as a provider of knowledge on these topics - not only within the organisation, but also to the outside world, for example in the form of the ALASCA Tech Talks.

With a strong, motivated community and the combined expertise of its members, ALASCA is driving forward digital sovereignty in Germany and Europe in the long term - also in collaboration with other open source initiatives and communities in the digital sector.